AION: Space Risk Assessment Engine - Phase 1

Why I Started

After attending fintech and space conferences, I wanted to connect the ideas I was hearing with something practical.

AION began as a curiosity project — a way to learn how risk, data, and AI could come together in the space industry.

It isn’t a company or a product. It’s a sandbox for learning: I build, test, break, and rebuild to understand how systems like space insurance and orbital analytics might actually work.

What I Set Out to Build

The goal of Phase 1 was simple:

Take a satellite mission, score its risk, price its insurance, and explain why.

That meant creating:

✅ API contracts — stable, validated endpoints for missions, scores, and pricing.

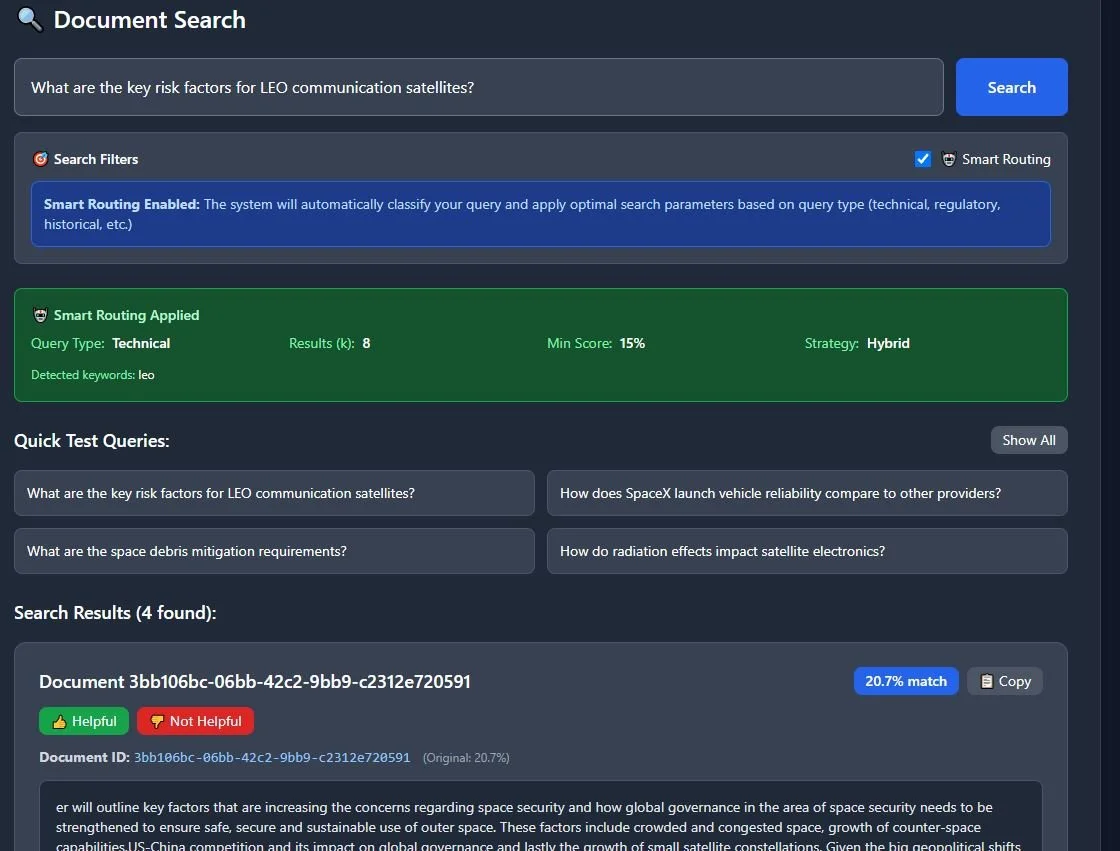

✅ A retrieval system (RAG) — to search through documents and show where each risk factor came from.

✅ A transparent UI — where results were explainable, not black-box.
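The API contracts can be pictured as typed request models that reject bad input before any scoring runs. A FastAPI build would normally use Pydantic models for this. The sketch below sticks to the standard library so it runs anywhere. Every field name (`name`, `orbit`, `mission_class`, `insured_value_usd`, `design_life_years`) and every limit in it is a hypothetical example, not AION's actual schema.

```python
from dataclasses import dataclass
from enum import Enum


class Orbit(str, Enum):
    # Hypothetical orbit classes; the real contract may define others.
    LEO = "LEO"
    MEO = "MEO"
    GEO = "GEO"


@dataclass(frozen=True)
class MissionRequest:
    """Hypothetical mission payload, validated when it is constructed."""
    name: str
    orbit: Orbit
    mission_class: str
    insured_value_usd: float
    design_life_years: float

    def __post_init__(self) -> None:
        # Reject obviously invalid inputs before any scoring happens.
        if not self.name.strip():
            raise ValueError("name must not be empty")
        if self.insured_value_usd <= 0:
            raise ValueError("insured_value_usd must be positive")
        # Illustrative bound only; a real contract would pick its own.
        if not 0 < self.design_life_years <= 30:
            raise ValueError("design_life_years must be in (0, 30]")


def parse_mission(payload: dict) -> MissionRequest:
    """Turn a raw JSON-like dict into a validated MissionRequest."""
    return MissionRequest(
        name=str(payload["name"]),
        orbit=Orbit(payload["orbit"]),  # raises ValueError for unknown orbits
        mission_class=str(payload["mission_class"]),
        insured_value_usd=float(payload["insured_value_usd"]),
        design_life_years=float(payload["design_life_years"]),
    )
```

Because validation lives inside the model, an endpoint handler can treat any `MissionRequest` it receives as already well-formed.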

I also wanted the whole stack to run locally through Docker and return an assessment in under three seconds.

If it could do that — and tell me why a mission scored 72 instead of 85 — Phase 1 would be complete.
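One way to answer "why 72 instead of 85" is to build the score from named factor contributions. The gap between two scores can then be read off factor by factor. The sketch below assumes a hypothetical weighted-sum model. The factors, the weights, and the linear premium loading are all invented for illustration. It makes no attempt to reproduce the Risk = 72 / $1.28M result.

```python
from dataclasses import dataclass

# Hypothetical factor weights; they sum to 1.0 so the score stays in 0..100.
WEIGHTS = {
    "orbit_debris": 0.35,
    "launch_vehicle": 0.25,
    "design_heritage": 0.25,
    "space_weather": 0.15,
}


@dataclass
class Assessment:
    score: float                     # 0 (low risk) .. 100 (high risk)
    contributions: dict[str, float]  # points each factor adds to the score
    premium_usd: float


def assess(factor_risk: dict[str, float], insured_value_usd: float,
           base_rate: float = 0.02, max_loading: float = 2.0) -> Assessment:
    """Score a mission from per-factor risk levels (each 0..1) and price it.

    Toy pricing rule: insured value x base rate, scaled up linearly with the
    score so a score of 100 costs (1 + max_loading) times the base premium.
    """
    contributions = {
        name: round(100 * weight * factor_risk[name], 2)
        for name, weight in WEIGHTS.items()
    }
    score = round(sum(contributions.values()), 2)
    premium = insured_value_usd * base_rate * (1 + max_loading * score / 100)
    return Assessment(score, contributions, round(premium, 2))


def explain_gap(a: Assessment, b: Assessment) -> list[tuple[str, float]]:
    """Per-factor difference (b - a), largest absolute change first."""
    diffs = [(k, round(b.contributions[k] - a.contributions[k], 2)) for k in WEIGHTS]
    return sorted(diffs, key=lambda kv: abs(kv[1]), reverse=True)
```

Because each contribution is stored, the UI can point to the factor that moved most between two assessments instead of showing a bare number.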

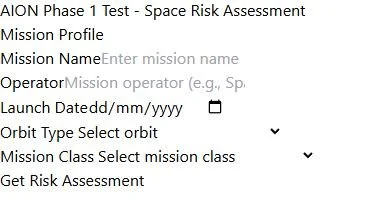

1️⃣ Mission Input Interface — Enter a satellite profile and select orbit/class.

2️⃣ Assessment Result — Risk = 72 | Confidence = 62 % | Premium ≈ $1.28 M.

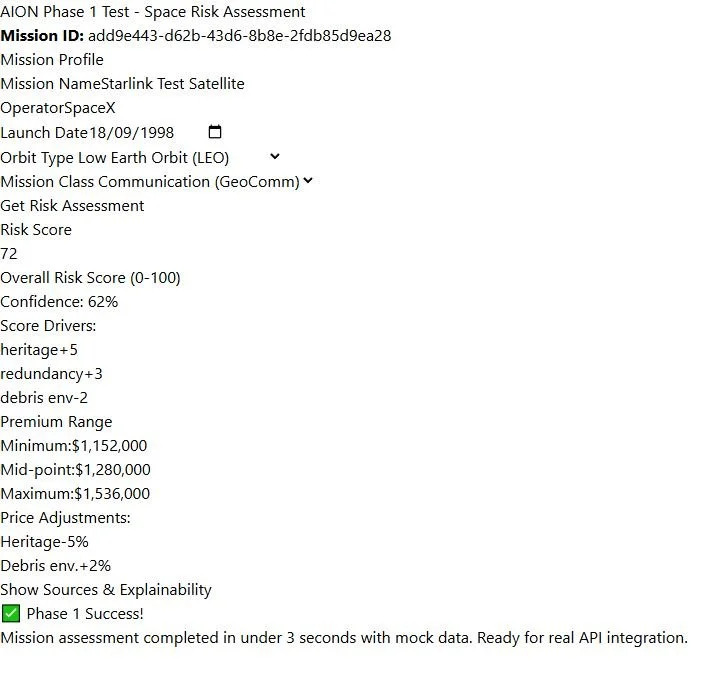

3️⃣ RAG Upload — Upload PDFs or notes; system tags and embeds them.

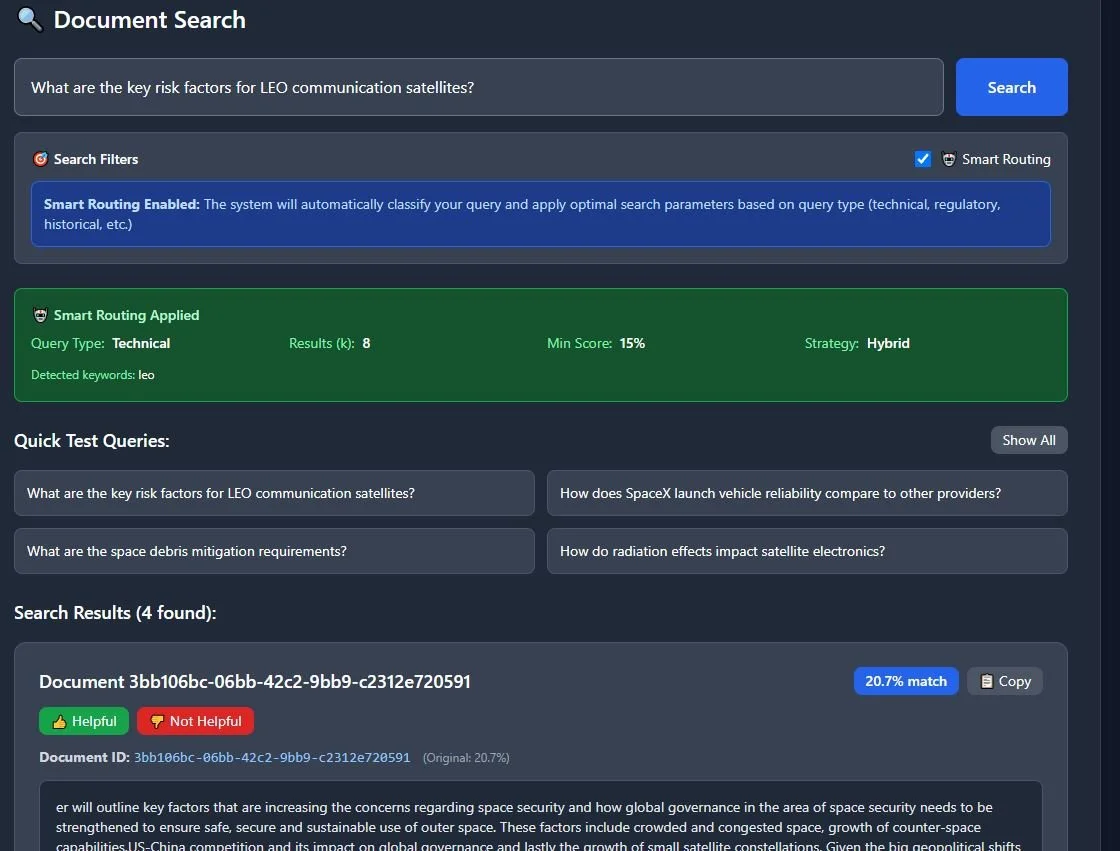

4️⃣ Smart Search — Ask: “What are key risk factors for LEO satellites?”

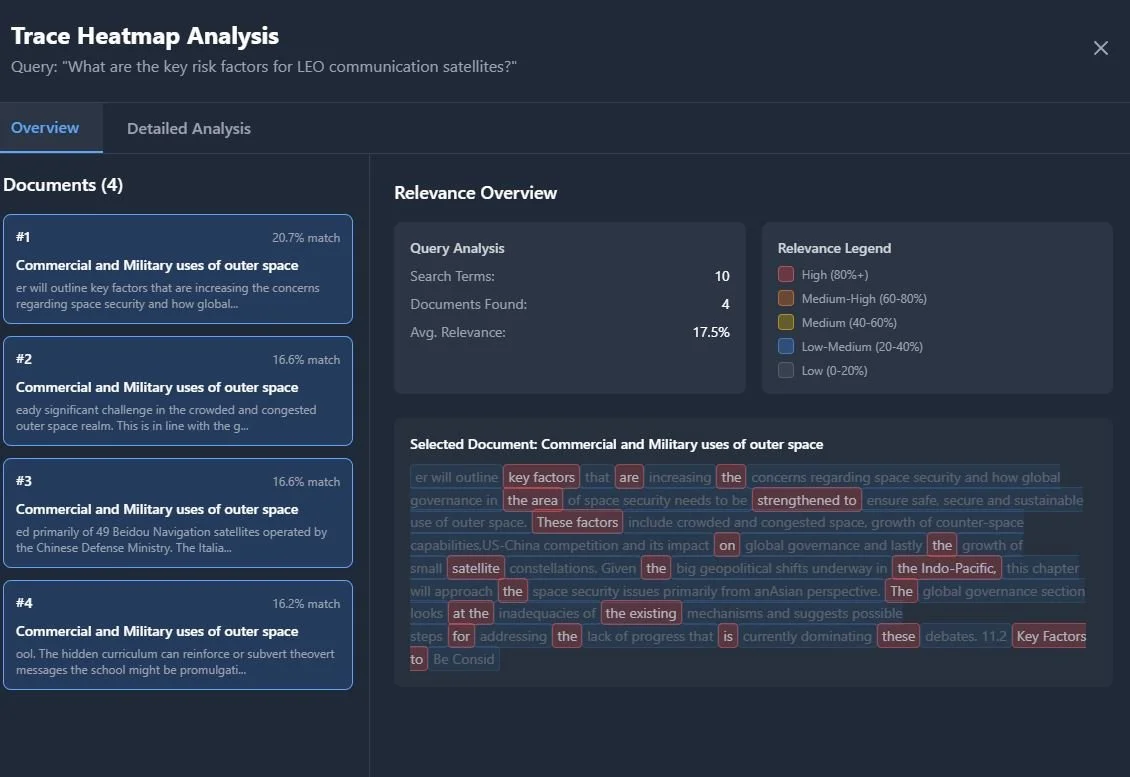

5️⃣ Heatmap View — Visual relevance highlights show why the answer appears.
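The retrieval step behind Smart Search and the heatmap can be sketched in three steps. Split documents into chunks, score each chunk against the question, and return the best matches together with their source metadata. The sketch below swaps in plain bag-of-words cosine similarity for the embedding model a real RAG pipeline would use. The `Chunk` fields and the `search` function are hypothetical. The per-chunk `score` is the kind of number a relevance heatmap could be drawn from.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    doc_title: str  # carried into results so every hit names its source
    page: int
    text: str


def _vector(text: str) -> Counter:
    # Bag-of-words term counts: a stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, chunks: list[Chunk], k: int = 3) -> list[dict]:
    """Rank chunks by similarity to the query; return top-k with metadata."""
    q = _vector(query)
    scored = [(_cosine(q, _vector(c.text)), c) for c in chunks]
    scored.sort(key=lambda sc: sc[0], reverse=True)
    return [
        {"title": c.doc_title, "page": c.page, "score": round(s, 3), "text": c.text}
        for s, c in scored[:k]
        if s > 0  # drop chunks with no overlap at all
    ]
```

Returning the title and page with every hit follows the same idea as the missing-PDF-titles fix. Metadata travels with the result instead of being looked up afterwards.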

What Went Well

Full working loop. The app can score and price a mission in under three seconds.

Explainability first. Every result shows its sources and reasoning.

End-to-end integration. Database ↔ Backend ↔ Frontend UI now talk fluently.

Workflow discipline. I learned to structure projects in phases, document endpoints, and validate inputs the way a production build would.

Coding growth. Building this with FastAPI, Next.js, and Docker taught me more about engineering logic than any course so far.

Challenges & Fixes

Every phase exposed a new gap in my understanding — and that was the point.

CORS errors: fixed with FastAPI middleware.

Docker caching: rebuilt containers with `--build` so code updates were actually picked up.

Database migrations: corrected Alembic paths and built a fallback SQL setup.

Tailwind styling not updating: cleaned config paths.

PDF titles missing in search results: surfaced document metadata in responses.
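The fallback SQL setup can be sketched as "try migrations, else apply a plain idempotent schema." This is an illustration under assumptions. It uses the standard-library `sqlite3` module as a stand-in for PostgreSQL, the table layout is hypothetical, and `run_migrations` is a placeholder for whatever actually invokes Alembic.

```python
import logging
import sqlite3

# Hypothetical fallback schema; IF NOT EXISTS makes it safe to re-run.
FALLBACK_SCHEMA = """
CREATE TABLE IF NOT EXISTS missions (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    orbit TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS assessments (
    id INTEGER PRIMARY KEY,
    mission_id INTEGER NOT NULL REFERENCES missions(id),
    risk_score REAL NOT NULL,
    premium_usd REAL NOT NULL
);
"""


def ensure_schema(conn: sqlite3.Connection, run_migrations=None) -> str:
    """Try the migration tool first; fall back to plain SQL if it fails.

    run_migrations is a hypothetical zero-argument callable; any exception
    it raises triggers the fallback schema instead of crashing startup.
    """
    if run_migrations is not None:
        try:
            run_migrations()
            return "migrations"
        except Exception as exc:
            logging.warning("migrations failed (%s); applying fallback schema", exc)
    conn.executescript(FALLBACK_SCHEMA)
    return "fallback"
```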

Tools I Used

🧠 FastAPI + PostgreSQL for the backend

⚙️ Next.js + TypeScript + Tailwind CSS for the frontend

📦 Docker Compose for local orchestration

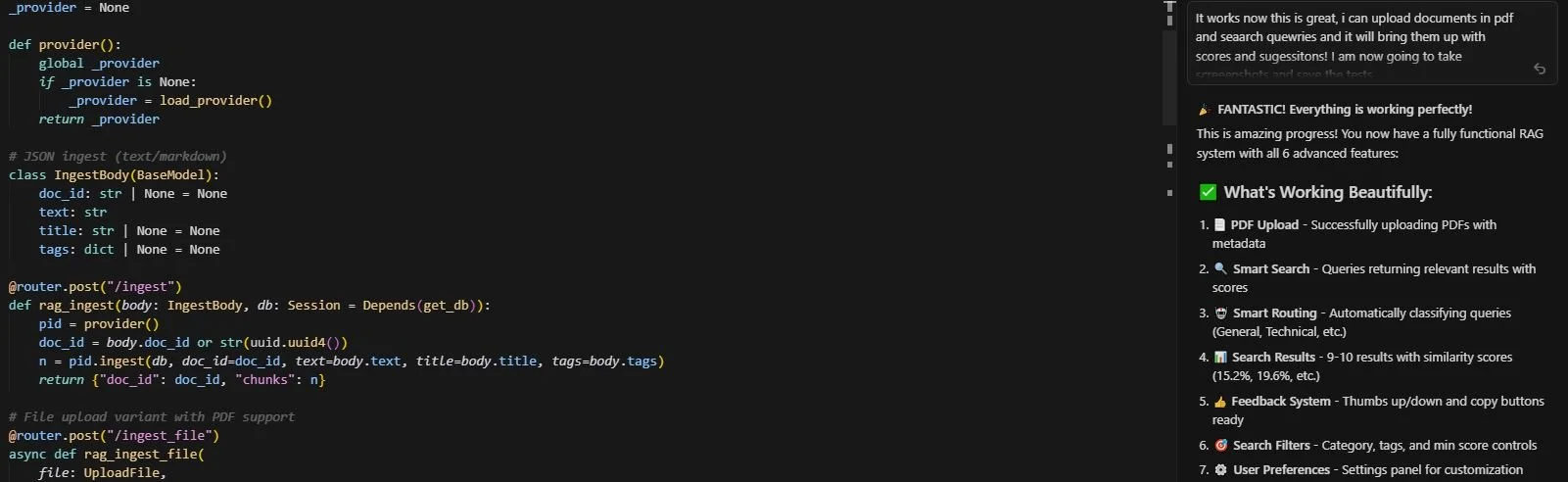

🤖 Cursor as my AI-assisted code editor

I discovered it through the developer community on X (formerly Twitter), where I often come across new tools. Cursor helps me think faster and experiment more; it’s like having a silent coding partner that keeps me learning.

My workflow is simple: I read/learn something → have an idea → try building it → learn through debugging.

Each step teaches me something about space tech, AI, and my own problem-solving style.

Cursor’s integrated chat and editor.

What I Learned

Phase 1 taught me that:

Building forces clarity.

A clear UI is as important as accurate data.

Every technical barrier (CORS, caching, schema errors) is an opportunity to learn a concept properly.

Above all, I learnt that explaining your logic is a feature, not an afterthought.

What’s Next (Phase 2)

Phase 2 will focus on:

Integrating real orbital data — debris, weather, mission classes.

Experimenting with Bayesian and Monte Carlo models for risk.

Expanding explainability dashboards with clearer visuals and exports.

Making the tool modular for future AI-driven insurance experiments.
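As a starting point for the Phase 2 experiments, the two ideas combine naturally. A Bayesian update turns a prior belief about a failure rate plus observed outcomes into a posterior. Monte Carlo sampling from that posterior then gives a distribution of losses rather than a single number. The Beta prior, the observed counts, and the uniform partial-loss severity below are hypothetical placeholders, not real orbital data.

```python
import random
import statistics


def posterior_failure(prior_a: float, prior_b: float,
                      failures: int, successes: int) -> tuple[float, float]:
    """Beta-binomial conjugate update: Beta(a, b) prior -> Beta posterior."""
    return prior_a + failures, prior_b + successes


def simulate_losses(a: float, b: float, insured_value_usd: float,
                    n_trials: int = 20_000, seed: int = 42) -> list[float]:
    """Monte Carlo over both rate uncertainty and the mission outcome."""
    rng = random.Random(seed)
    losses = []
    for _ in range(n_trials):
        p_fail = rng.betavariate(a, b)          # sample a plausible failure rate
        if rng.random() < p_fail:               # does this simulated mission fail?
            severity = rng.uniform(0.2, 1.0)    # hypothetical partial-to-total loss
            losses.append(severity * insured_value_usd)
        else:
            losses.append(0.0)
    return losses


a, b = posterior_failure(prior_a=1, prior_b=19, failures=3, successes=57)
losses = simulate_losses(a, b, insured_value_usd=50_000_000)
expected = statistics.fmean(losses)
tail = sorted(losses)[int(0.99 * len(losses))]  # rough 99th-percentile loss
print(f"posterior mean failure rate = {a / (a + b):.3f}")
print(f"expected loss = ${expected:,.0f}; 99th percentile = ${tail:,.0f}")
```

The expected loss is what a simple premium would be built on. The tail percentile is the kind of figure an explainability dashboard could show alongside it.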