AION: Module 2 — From Black Box to Glass Box

The Problem: Calculators Aren't Enough

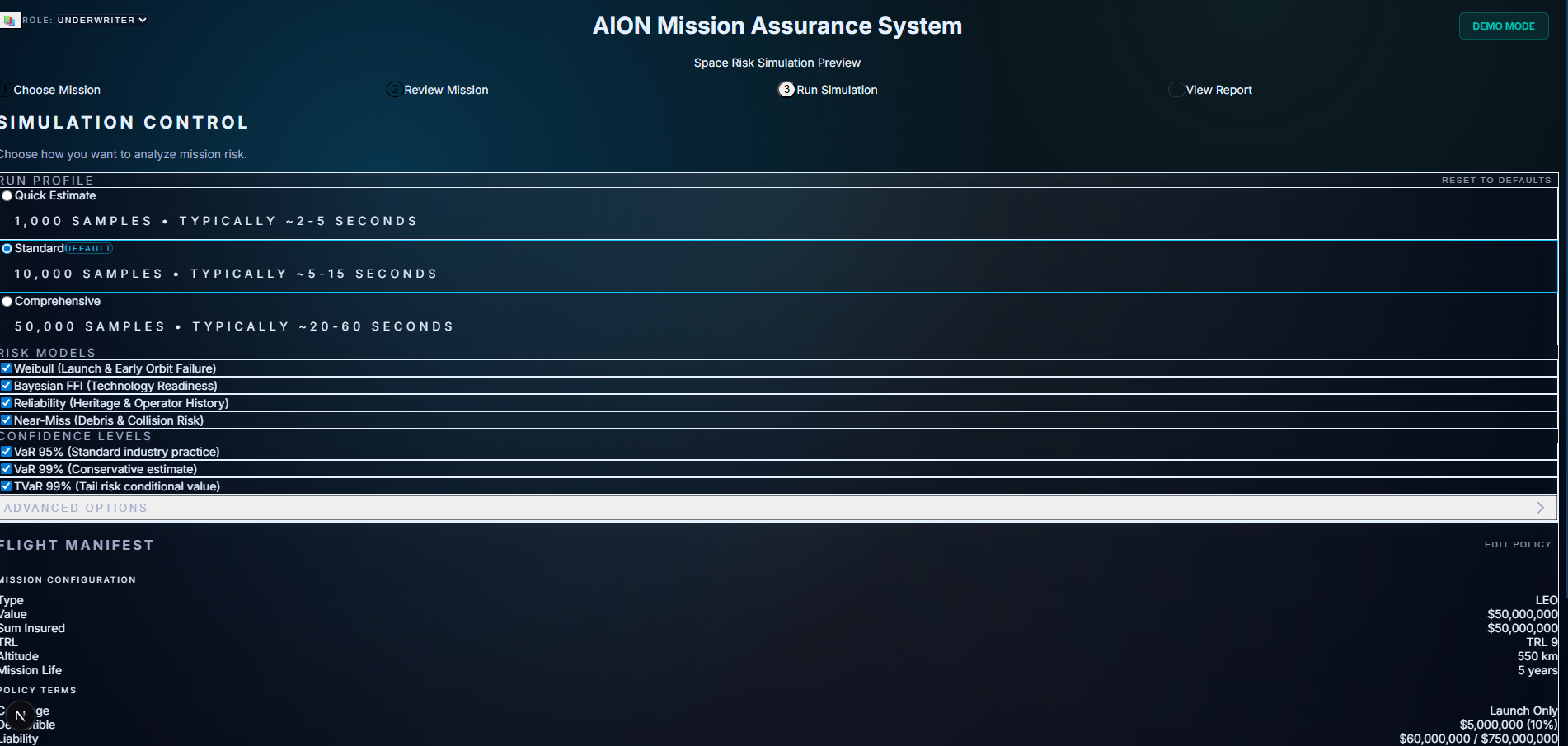

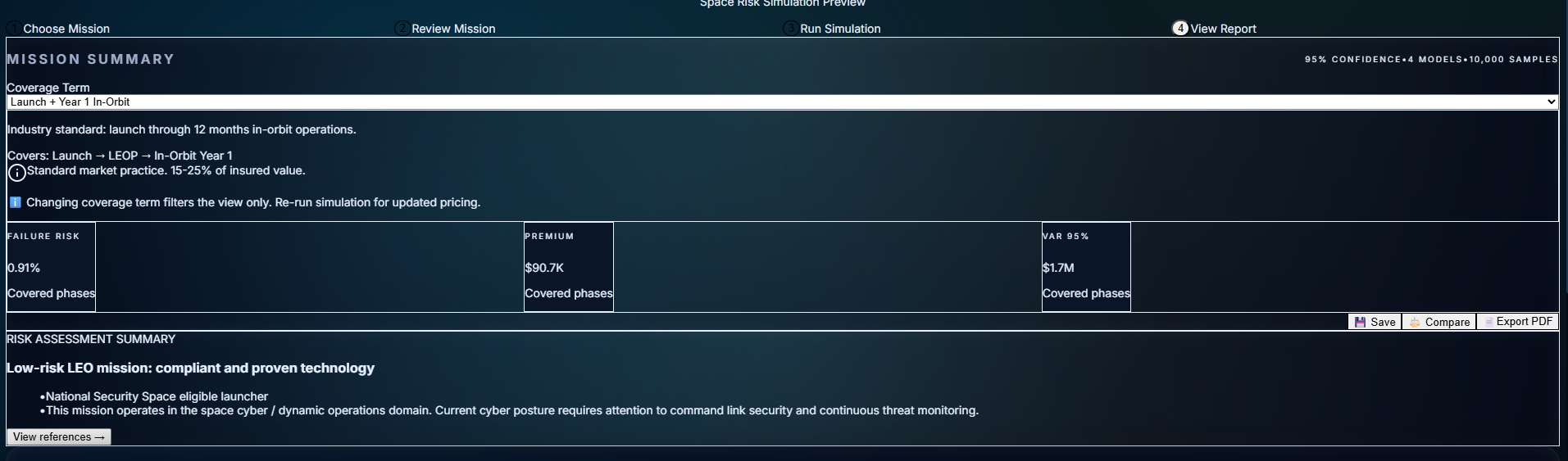

Module 1 gave you numbers. You could configure a mission, run the Monte Carlo model, and get a premium out the other side. It was fast. It was mathematically sound.

But in speciality insurance, numbers aren't enough.

A junior underwriter can run a model. A senior underwriter knows the model is only 10% of the story. They ask:

"Is this mission even legal under current UKSA regulations?"

"Have we seen a similar satellite configuration fail in LEO before?"

"Is this operator sanctioned?"

"Why is the premium 12.4%?"

If your system can't answer those questions—if it just says "trust me"—it's useless in the Lloyd's market. You need to defend every quote to capital providers, brokers, and regulators.

Module 1 turned AION into a risk brain.

Module 2 is the difference between a calculator and a senior underwriter.

It's the Context Engine: the piece that checks regulatory compliance, remembers past missions, explains every metric, and cites its sources. It turns AION from "here's a number" into "here's a defensible underwriting decision with regulatory backing and portfolio precedent."

Timeline: December 2–11, 2025 (9 days, ~180 hours) · Status: ✅ Frozen for V1

What I Built: The Context Engine

Module 2 delivers four core capabilities:

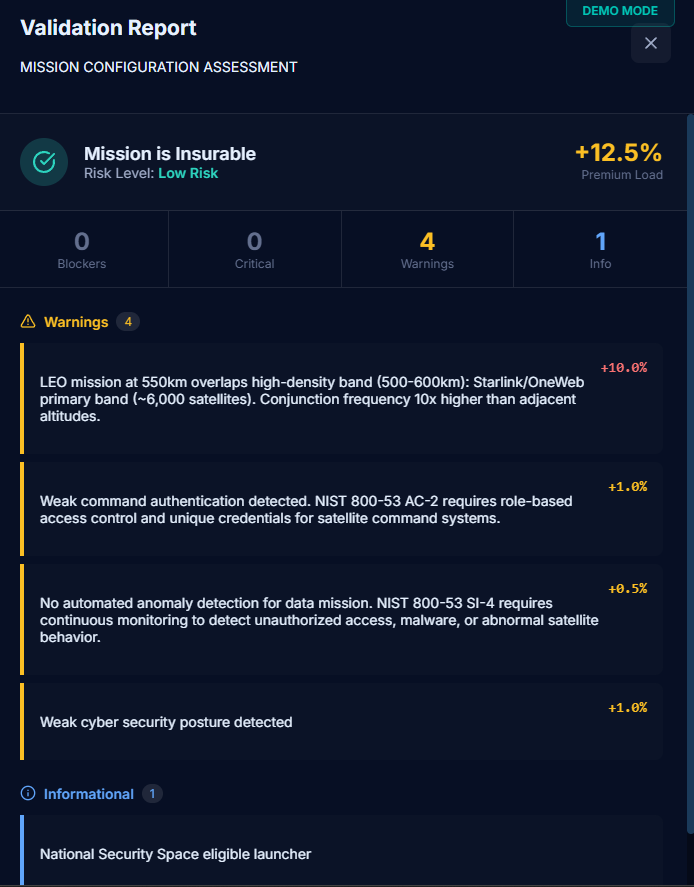

1. Regulatory Validation (22 Rules)

A rule engine that checks missions against international regulations, market access restrictions, technical safety requirements, and financial viability before pricing runs.

Traffic-light system (GREEN / AMBER / RED)

Each flag backed by citations to specific regulatory clauses

2. Hybrid Search + Glass-Box Narrative

A search engine that fuses:

vector similarity (semantic meaning)

BM25 keyword search

recency

reliability scoring

Every risk assessment comes with a narrative: bullet points, regulatory citations, and methodology references.

Not just "premium is 5.6%" but "here's why, here's the clause, here's the comparable mission".

3. Portfolio Memory (Similar Missions)

Every priced mission is saved as an embedding.

When a new submission comes in, the system finds similar missions by orbit, mass, heritage, and premium band.

"We've seen this before" → instant reference pricing and consistency.

4. Metric Explainability

Click any metric (failure_risk, premium, VaR, phase risks) and get:

the formula used

the inputs for this mission

comparable values from similar missions

links back to methodology docs

It takes AION from black box to glass box.

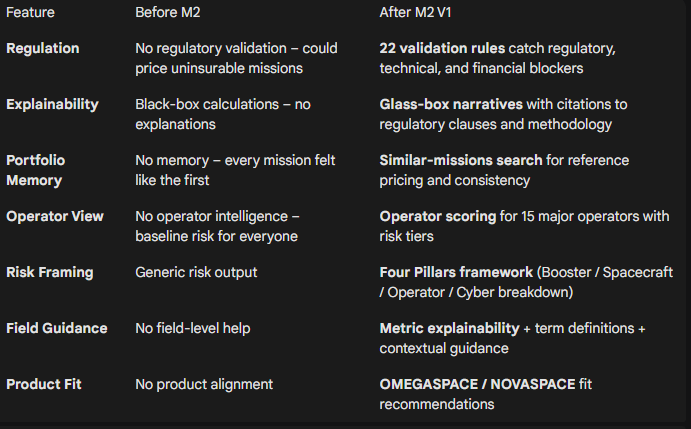

Before Module 2 vs After Module 2

Module 2 is now FROZEN for V1.

This isn't ongoing tinkering—it's a shipped, production-ready module with 30 passing tests, 80%+ coverage, and complete documentation.

Next steps: finish the broker-facing flow, then build Module 3 on top.

How It Works: Inside the Context Engine

Building a glass box isn't just prompt engineering. It requires architecture that forces the AI to check its facts before it speaks.

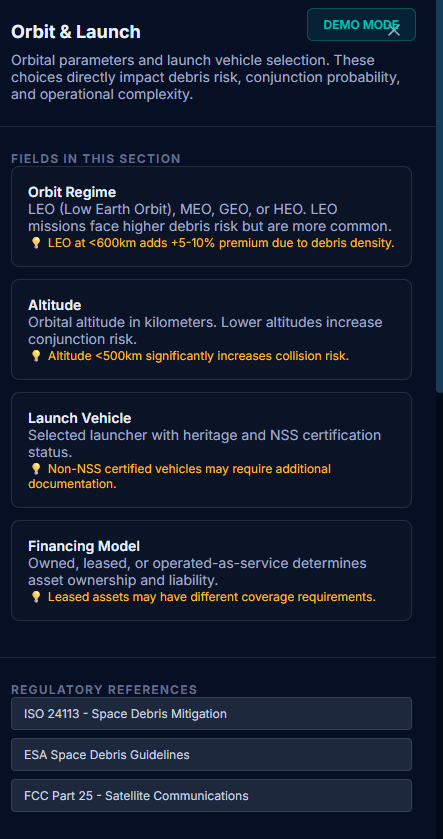

3a. Regulatory Validation: The Gatekeeper

Module 2's rule engine runs before any pricing logic. It applies 22 validation rules across four categories:

Regulatory compliance: ISO 24113 (25-year disposal), FCC 5-year deorbit, UKSA licensing

Market access: ITAR/EAR restrictions (China, India, Vietnam, Thailand)

Financial viability: Flags operators with <6 months funding runway

Sanctions & export control: LMA 3100A sanctions clause, US content thresholds

Each mission gets a traffic light (GREEN/AMBER/RED). RED flags block the quote entirely. AMBER flags add premium loadings with regulatory justification.

Example: A LEO constellation with a Chinese parent company triggers the Foreign Ownership Rule → AMBER flag → cites specific ITAR clause → adds 15% premium load.

This isn't an LLM guessing. It's deterministic logic checking real regulatory requirements. It stops you from wasting capital on uninsurable risk.
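The gatekeeper pattern can be sketched as a list of pure rule functions. Each rule returns an optional flag, and the mission's overall light is the worst flag raised. This is a minimal illustration under stated assumptions, not AION's actual rule engine. The `Mission` fields, rule IDs and the three sample rules (3 of the 22) are hypothetical. The AMBER severity for the funding-runway rule is assumed. The ITAR citation is a placeholder because the text does not name the clause. The 15% load is treated as a relative loading.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, Optional


class Light(IntEnum):
    """Traffic-light levels; IntEnum ordering lets max() pick the worst flag."""
    GREEN = 0
    AMBER = 1
    RED = 2


@dataclass
class Flag:
    rule_id: str
    light: Light
    citation: str               # regulatory clause backing the flag
    premium_load: float = 0.0   # relative loading, e.g. 0.15 = +15% (assumed)


@dataclass
class Mission:
    # Hypothetical fields for illustration only.
    orbit: str
    disposal_years: float
    parent_country: str
    funding_runway_months: float


# A rule inspects a mission and returns a Flag, or None if the mission passes.
Rule = Callable[[Mission], Optional[Flag]]


def disposal_rule(m: Mission) -> Optional[Flag]:
    if m.orbit == "LEO" and m.disposal_years > 25:
        return Flag("ISO24113_DISPOSAL", Light.RED, "ISO 24113, Clause 5.1 (25-year disposal)")
    return None


def foreign_ownership_rule(m: Mission) -> Optional[Flag]:
    if m.parent_country == "CN":
        # Placeholder citation: the specific ITAR clause is not named in the text.
        return Flag("FOREIGN_OWNERSHIP", Light.AMBER, "ITAR (clause placeholder)", 0.15)
    return None


def funding_runway_rule(m: Mission) -> Optional[Flag]:
    if m.funding_runway_months < 6:
        # AMBER is an assumed severity for this rule.
        return Flag("FUNDING_RUNWAY", Light.AMBER, "Financial viability: <6 months runway")
    return None


RULES: list[Rule] = [disposal_rule, foreign_ownership_rule, funding_runway_rule]


def validate(m: Mission) -> tuple[Light, list[Flag]]:
    """Run every rule; the overall light is the worst flag raised (GREEN if none)."""
    flags = [f for f in (rule(m) for rule in RULES) if f is not None]
    overall = max((f.light for f in flags), default=Light.GREEN)
    return overall, flags


def premium_multiplier(flags: list[Flag]) -> float:
    """Combine loadings additively (an assumption); a RED light blocks the quote upstream."""
    return 1.0 + sum(f.premium_load for f in flags)
```

Each rule is a plain function of the mission, so the same input always yields the same flags and citations. That repeatability is what makes the result auditable.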

3b. Hybrid Search + Glass-Box Narrative

The biggest risk in AI for insurance isn't hallucination—it's opacity.

To solve this, I built a hybrid search engine that fuses:

Vector search (pgvector HNSW) for semantic similarity

BM25 keyword search (PostgreSQL full-text) for exact clause matches

Reciprocal Rank Fusion to merge results

Weighted scoring: 40% semantic + 30% keyword + 15% recency + 15% reliability
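The two fusion ideas in this list can be sketched separately. Reciprocal Rank Fusion scores each document by summing 1/(k + rank) over every ranked list it appears in; k = 60 is the commonly used default. The weighted blend applies the 40/30/15/15 split to per-document signals. The text does not say exactly how RRF and the weighted score are combined in AION, so this sketch keeps them as independent functions. The document IDs and the assumption that each signal is normalised to [0, 1] are illustrative.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked lists of doc IDs: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: defaultdict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Weights quoted in the text; the signal names are illustrative.
WEIGHTS = {"semantic": 0.40, "keyword": 0.30, "recency": 0.15, "reliability": 0.15}


def weighted_score(signals: dict[str, float]) -> float:
    """Blend per-document signals, each assumed to be normalised to [0, 1]."""
    return sum(weight * signals[name] for name, weight in WEIGHTS.items())


if __name__ == "__main__":
    vector_hits = ["iso24113_5.1", "fcc_deorbit", "uksa_licensing"]
    keyword_hits = ["iso24113_5.1", "uksa_licensing", "esa_guidelines"]
    for doc_id, score in reciprocal_rank_fusion([vector_hits, keyword_hits]):
        print(f"{doc_id}: {score:.4f}")
```

RRF uses only ranks, which sidesteps the problem that cosine similarities and full-text scores live on different scales. The fixed-weight blend, by contrast, needs each signal normalised first.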

The corpus includes UKSA regulations, ISO standards, FCC rules, ESA guidelines, and internal methodology docs (Weibull parameters, pricing logic, Monte Carlo VaR).

Every risk narrative comes with citations. Not "this mission is risky because AI said so" but "this mission triggers Clause 5.1 of ISO 24113 because the deorbit plan exceeds 25 years."

The system doesn't just find close text—it finds the most trustworthy clauses for the specific mission configuration.

3c. Portfolio Memory: "We've Seen This Before"

Human underwriters rely on memory: "This looks like that Starlink launch from 2022."

Module 2 replicates this with a memory system.

After each pricing run, AION saves:

Mission summary (as vector embedding)

Key stats (orbit, mass, heritage, premium, risk band)

Coverage structure and operator profile

When a new mission arrives, the system searches for similar missions across three dimensions:

Semantic similarity (mission description and risk profile)

Configuration proximity (orbit, altitude ±100 km, mass, launch vehicle)

Pricing band (premium within ±2%)
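Here is a minimal sketch of the similar-missions lookup, assuming the portfolio fits in memory. It applies hard filters on orbit, altitude (±100 km) and premium, reading ±2% as ±2 percentage points. It then ranks the survivors by cosine similarity on their stored summary embeddings. Given the stack, the vector ranking would more likely run inside pgvector in practice. The `MissionRecord` fields, the toy embeddings and the `reference_band` helper are hypothetical. Mass and launch-vehicle matching are omitted for brevity. The premium filter assumes the new mission already carries an indicative premium from Module 1.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class MissionRecord:
    # Hypothetical stored fields; real records would also hold mass, heritage, etc.
    mission_id: str
    embedding: list[float]   # summary embedding (toy low-dimensional vectors here)
    orbit: str
    altitude_km: float
    premium_pct: float


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    norm = math.hypot(*a) * math.hypot(*b)
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def similar_missions(query: MissionRecord, portfolio: list[MissionRecord], top_k: int = 3,
                     alt_tol_km: float = 100.0, premium_tol_pct: float = 2.0) -> list[MissionRecord]:
    """Filter on configuration and pricing band, then rank by embedding similarity."""
    candidates = [
        r for r in portfolio
        if r.orbit == query.orbit
        and abs(r.altitude_km - query.altitude_km) <= alt_tol_km
        and abs(r.premium_pct - query.premium_pct) <= premium_tol_pct
    ]
    candidates.sort(key=lambda r: cosine(query.embedding, r.embedding), reverse=True)
    return candidates[:top_k]


def reference_band(matches: list[MissionRecord]) -> Optional[tuple[float, float]]:
    """Premium range of the matched missions; None when there is no precedent."""
    if not matches:
        return None
    premiums = [r.premium_pct for r in matches]
    return min(premiums), max(premiums)
```

Filtering before ranking keeps a semantically similar but structurally different mission, such as a GEO satellite, from appearing as precedent for a LEO quote.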

Result: Reference pricing for consistency. "We priced three similar LEO comms missions at 4.2–4.8%; this one at 4.5% is in line with portfolio precedent."

This creates an instant feedback loop: "We've seen this before." It ensures pricing consistency across the portfolio and prevents underwriters from starting from zero on every deal.

3d. Metric Explainability: No More "Trust Me"

In Module 1, metrics were black boxes. Module 2 makes them transparent.

Click any metric (failure_risk, premium, VaR, launch_phase_risk) and get:

The formula (e.g., Weibull β parameters, phase risk weightings)

The inputs used for this specific mission

Comparable values from similar missions

Links to methodology documentation
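For the `failure_risk` metric, the formula view could show something like the standard two-parameter Weibull CDF. In that model the shape parameter β captures infant mortality (β < 1) or wear-out (β > 1), and the scale η is the characteristic life. This sketch assumes that parameterisation, which may differ from AION's. The `explain_failure_risk` payload shape is hypothetical. Real β and η values would come from the methodology docs, not from this example.

```python
import math


def weibull_failure_prob(t_years: float, beta: float, eta_years: float) -> float:
    """Two-parameter Weibull CDF: P(failure by t) = 1 - exp(-(t / eta) ** beta)."""
    if t_years < 0 or beta <= 0 or eta_years <= 0:
        raise ValueError("t must be >= 0 and beta, eta must be > 0")
    return 1.0 - math.exp(-((t_years / eta_years) ** beta))


def explain_failure_risk(t_years: float, beta: float, eta_years: float) -> dict:
    """Hypothetical explainability payload: formula, inputs, value and doc link."""
    return {
        "metric": "failure_risk",
        "formula": "1 - exp(-(t / eta) ** beta)",
        "inputs": {"t_years": t_years, "beta": beta, "eta_years": eta_years},
        "value": weibull_failure_prob(t_years, beta, eta_years),
        "docs": ["Weibull_Methodology.md"],
    }
```

At t = η the failure probability is 1 − e⁻¹ ≈ 63% for any β. This makes η a convenient anchor when explaining the number to a junior underwriter.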

Example: Premium of 5.6% → Formula breakdown → Shows launch heritage factor (0.92), TRL adjustment (1.15), cyber gap loading (+0.8%) → Links to Weibull_Methodology.md and Heritage_Scoring.md
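The breakdown in this example can be reproduced with a small structure that traces each step. It applies multiplicative factors to a base rate, then adds loadings in percentage points. The 4.5% base rate is hypothetical. It was chosen only so that the article's factors (0.92 heritage, 1.15 TRL, +0.8 cyber) land near 5.6%. The multiply-then-add ordering and the `PremiumBreakdown` class are assumptions, not the documented AION pricing formula.

```python
from dataclasses import dataclass


@dataclass
class PremiumBreakdown:
    base_rate_pct: float
    factors: dict[str, float]        # multiplicative adjustments, applied in order
    loadings_pct: dict[str, float]   # additive loadings in percentage points

    def steps(self) -> list[str]:
        """Human-readable trace of how the rate is built up, one line per step."""
        rate = self.base_rate_pct
        lines = [f"base rate: {rate:.2f}%"]
        for name, factor in self.factors.items():
            rate *= factor
            lines.append(f"x {factor:.2f} ({name}) -> {rate:.2f}%")
        for name, load in self.loadings_pct.items():
            rate += load
            lines.append(f"+ {load:.2f} pts ({name}) -> {rate:.2f}%")
        return lines

    def total_pct(self) -> float:
        rate = self.base_rate_pct
        for factor in self.factors.values():
            rate *= factor
        return rate + sum(self.loadings_pct.values())


if __name__ == "__main__":
    premium = PremiumBreakdown(
        base_rate_pct=4.5,  # hypothetical base rate
        factors={"launch_heritage": 0.92, "trl_adjustment": 1.15},
        loadings_pct={"cyber_gap": 0.8},
    )
    print("\n".join(premium.steps()))
    # base rate: 4.50%
    # x 0.92 (launch_heritage) -> 4.14%
    # x 1.15 (trl_adjustment) -> 4.76%
    # + 0.80 pts (cyber_gap) -> 5.56%
    print(f"premium: {premium.total_pct():.1f}%")  # premium: 5.6%
```

The design choice is to keep the trace and the total side by side. Each intermediate rate the dashboard shows then ties back to a named input.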

This turns the dashboard from a static display into a teaching tool for junior underwriters. They can see why the number is what it is, backed by methodology docs, not just LLM output.

Why It Matters

For Underwriters

Module 2 delivers defensible decisions under scrutiny.

When a broker pushes back on a quote, or a regulator asks why you declined coverage, you aren't guessing. You have:

Regulatory citations for every validation flag

Portfolio precedent from similar missions

Methodology docs backing every metric

A regulator-ready narrative with sources

It's the difference between "the model says no" and:

"This mission violates ISO 24113 Clause 5.1; here are three comparable missions we declined for the same reason, and here's the regulatory framework we're operating under."

For AION as a Product

Module 1 proved I could build risk models.

Module 2 proves I understand how underwriters actually think: regulatory frameworks, portfolio consistency, explainability under pressure.

This isn't a tech demo built in isolation. It's a tool designed with underwriting logic baked in from the start:

22 validation rules catching what experienced underwriters would flag manually

Four Pillars framework mirroring how Lloyd's syndicates structure risk

Similar-missions search replicating institutional memory

Glass-box narratives that can be sent directly to brokers

For an employer like Relm, this shows systems thinking at the domain level, not just coding ability.

For My Portfolio

Together, M1 + M2 demonstrate:

M1: I can build Monte Carlo risk engines, phase-based models, and pricing logic

M2: I can layer regulatory intelligence, RAG pipelines, and explainability on top

Combined: I can ship production-ready systems fast (9 days for M2) with real business value

This is what the space insurance industry needs: someone who can move between quant modelling, regulatory compliance, and product design without translation layers.

What's Next

Module 2 is frozen. The Context Engine is production-ready.

But AION isn't done. The full vision needs three more pieces.

Immediate: Broker Wizard Flow (In Progress)

Right now, the underwriter flow (M1 + M2) works end-to-end. Brokers need a different interface—simplified submission, portfolio view, and quote comparison.

I'm building the broker-facing flow now (NOVASPACE): a three-step submission wizard with cyber posture assessment, governance scoring, and product risk profiling. Cleaner inputs, batch quoting, and exportable deal sheets.

Next: Polish & Integration

Once the broker wizard is complete, I'll tighten the handoff between the two flows:

Broker submits → mission config + portfolio context

Underwriter reviews → validation flags + similar missions + M2 narrative

System recommends → GREEN / AMBER / RED with premium and terms

The goal is a fully joined-up submission-to-quote workflow across both interfaces.

Then: Module 3 — Parametric Engine

The final piece of the core stack: how do we pay claims?

Module 3 will handle:

Parametric trigger definitions (launch failure, orbit insertion, collision events)

Event stream integration (space weather, debris conjunctions, anomaly feeds)

Trigger clause justification (using M2's regulatory and technical context)

Claims automation once trigger conditions are met

At that point, AION becomes a full lifecycle space risk platform:

submission → validation → pricing → monitoring → claims.

Technical Stack & Key Metrics

Backend

FastAPI (Python 3.11)

PostgreSQL 15 + pgvector (HNSW vector index)

SQLAlchemy 2.0 + Alembic

OpenAI text-embedding-3-small (embeddings)

Anthropic Claude Sonnet (narrative generation)

Frontend

Next.js 14 + React 18

TypeScript 5+

Tailwind CSS 3+

Zustand (state management)

Module 2 Metrics

Timeline: 9 days (2–11 December 2025)

Effort: ~180 hours across 11 phases

Code: ~12,500 LOC (≈8K backend, 4.5K frontend)

Tests: 30 tests, 80%+ coverage, 100% pass rate

Rules: 22 validation rules

Data: 6 context_* database tables

API: 10+ dedicated Context Engine routes

Status: ✅ Frozen for V1